Technology has always been the invisible hand that shapes the world. From the first sparks of human curiosity that led to the mastery of fire to the age of artificial intelligence, technology has defined civilizations, rewritten economies, and reimagined what it means to be human. The story of technology is not just about machines or devices; it is about human ambition: our endless desire to create, simplify, and transcend limitations.

In this comprehensive look into the world of technology, we’ll explore how it has evolved over the decades, its influence on our daily lives, the rise of artificial intelligence, the transformation of industries, and the philosophical questions it raises about the future of humanity.

The Early Digital Age: Laying the Foundations

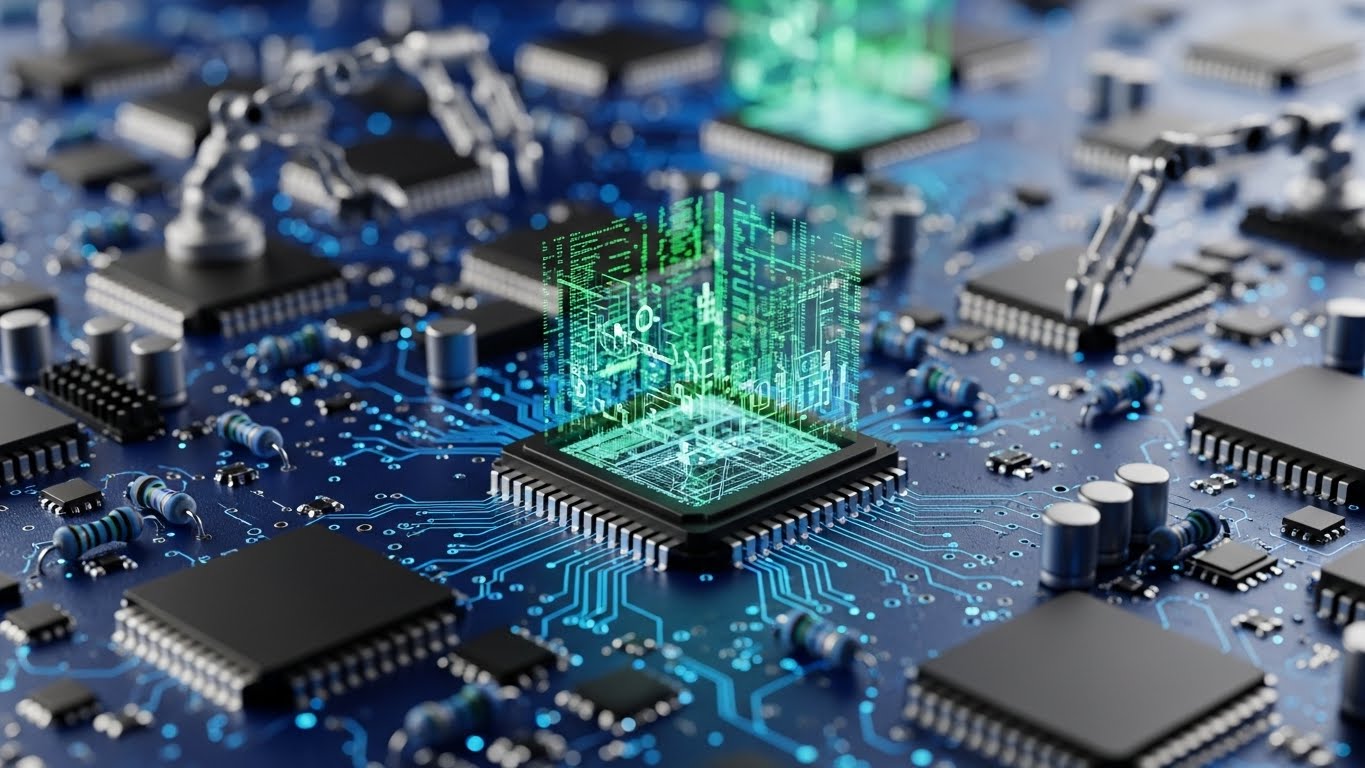

The digital age did not begin with smartphones or social media; it began quietly in the mid-20th century, when computing was still in its infancy. The invention of the transistor in 1947 revolutionized electronics, making machines faster, smaller, and more efficient. By the 1950s and 60s, the first computers, many of them still the size of entire rooms, were performing calculations that had previously been unthinkable.

When the first microprocessor was developed in 1971 by Intel, the stage was set for a digital revolution. This small silicon chip would go on to power an entire generation of technological growth. Personal computers soon became the new frontier. In the 1980s, companies like Apple, IBM, and Microsoft transformed computing from a scientific tool into a household necessity. The idea of a computer in every home, once a fantasy, quickly became a reality.

The 1990s brought the internet into public life and made it the connective tissue of the modern world. What began as a network for academic research grew into a vast web of information that connected people across continents. The World Wide Web, introduced at the start of the decade, democratized knowledge, reshaping education, communication, and commerce. It was the digital renaissance.

The Internet Era: Connecting the World

The arrival of the internet changed everything. Suddenly, information was not confined to books or libraries but was available at the click of a mouse. Businesses adapted to online platforms, emails replaced letters, and digital marketing became the heartbeat of modern commerce. The dot-com boom of the late 1990s marked a time of wild experimentation and innovation. Though many early internet startups failed, they laid the groundwork for the giants that would follow.

In the 2000s, the spread of broadband connections made the internet faster and more accessible. Platforms like Facebook (2004), YouTube (2005), and Twitter (2006) emerged, changing the way people communicated and consumed information. The web became not just a tool but an extension of human identity. People began living part of their lives online, creating digital personas, sharing experiences, and building communities that transcended physical boundaries.

The internet also redefined business. E-commerce became a new model of success, with companies like Amazon showing the world that the future of retail was digital. Online education, streaming services, and remote work all sprouted from the connectivity that the web enabled. The concept of a global village became reality — the world was more connected than ever before.

The Smartphone Revolution: The Computer in Your Pocket

If the internet connected the world, smartphones personalized it. When Apple launched the first iPhone in 2007, it was more than a new gadget; it was a paradigm shift. A phone was no longer just for calls or messages — it became a camera, a music player, a computer, and a window into the digital universe. The smartphone condensed the entire digital world into the palm of a hand.

This shift altered how people interacted with technology. Apps became the new ecosystem of innovation. Navigation, banking, shopping, dating, learning, and even healthcare began to rely on mobile applications. The rise of mobile operating systems like iOS and Android opened the floodgates for developers to innovate on a scale never seen before.

Smartphones also transformed media consumption. Social media apps became the new town squares, where ideas spread faster than any newspaper could manage. However, this constant connectivity also had a darker side — digital addiction, misinformation, and privacy erosion became the new challenges of the digital era.

The Cloud: The Invisible Backbone of Modern Technology

As devices became smarter and the internet became faster, another innovation quietly reshaped technology — cloud computing. The concept of the cloud was simple yet revolutionary: instead of storing data locally on a device, information could be stored and accessed remotely from servers located anywhere in the world.

This changed everything about how businesses and individuals managed information. The cloud made it possible for teams to collaborate in real-time across continents, for massive companies to run complex software without physical infrastructure, and for users to back up photos, documents, and media instantly.

Streaming services like Netflix and Spotify depend on cloud infrastructure to deliver content to millions of users at once. So do enterprise solutions like Google Workspace, Microsoft 365, and countless others. The cloud effectively became the backbone of the modern digital ecosystem: invisible, yet indispensable.

Artificial Intelligence: The Birth of a Thinking Machine

Artificial Intelligence, or AI, has always been a dream of scientists and philosophers. The idea of creating machines that could think, learn, and make decisions has fascinated humanity for centuries. But it was only in recent years that this dream began to take tangible form.

Early AI systems were limited to simple problem-solving tasks, but the rise of machine learning, in which algorithms learn from data rather than follow hand-written rules, changed everything. AI could now analyze vast amounts of information, recognize patterns, and make predictions. From voice assistants like Siri and Alexa to recommendation engines on Netflix and YouTube, AI quietly became part of everyday life.
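To make "learning from data" a little more concrete, here is a minimal sketch in Python. It is only an illustration, not how any of the products mentioned above work: the function name `fit_line` and the sample numbers are assumptions made up for this example. The program is never told the rule behind the data; it estimates the rule from examples and then uses it to predict a value it has not seen.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept to the data by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # How x and y vary together, and how much x varies on its own.
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training data (invented for illustration).
inputs = [1, 2, 3, 4]
outputs = [3, 5, 7, 9]

slope, intercept = fit_line(inputs, outputs)

# Use the learned pattern to predict an input that was not in the data.
prediction = slope * 5 + intercept
print(f"learned rule: y = {slope} * x + {intercept}")
print(f"prediction for x = 5: {prediction}")
```

Real machine learning systems use far more data and far more flexible models, but the basic loop is the same: estimate parameters from examples, then apply them to new inputs.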

The real turning point came with deep learning and neural networks. These systems are loosely inspired by the structure of the human brain, allowing machines to perform complex tasks like image recognition, natural language processing, and even creative work. The rise of large language models marked another milestone: computers could now process and generate human-like text, enabling chatbots, translation tools, and content creation systems.

AI is now the driving force behind automation, robotics, autonomous vehicles, healthcare diagnostics, and financial forecasting. It is reshaping industries and redefining what human labor means in the digital age.

The Internet of Things: A World of Connected Devices

Imagine a refrigerator that orders groceries when supplies run low, or a thermostat that adjusts itself based on your schedule. This is not science fiction — it’s the Internet of Things (IoT). IoT refers to the vast network of physical objects embedded with sensors and connected through the internet.

This interconnected web of devices has turned ordinary objects into smart tools. Homes have become intelligent ecosystems, cities are adopting smart infrastructure, and industries are optimizing operations with real-time monitoring. From wearable health trackers to autonomous farming equipment, IoT has found applications in nearly every sector.

However, with great connectivity comes great vulnerability. Each connected device represents a potential entry point for cyberattacks, making cybersecurity one of the most critical issues in the IoT era.

Cybersecurity: Protecting the Digital Frontier

As the digital world expanded, so did the threats. The same networks that empower humanity also attract malicious actors seeking to exploit them. Cybersecurity has evolved into a full-fledged industry focused on protecting data, privacy, and infrastructure.

In the early days, viruses and worms were the main concerns. Today, the threats are far more complex — ransomware, phishing, identity theft, and state-sponsored cyberattacks are daily realities. The rise of cloud computing, IoT, and AI has only expanded the attack surface.

Modern cybersecurity relies heavily on encryption, authentication, and proactive monitoring. But technology alone is not enough. Human awareness plays an equally important role. The weakest link in any system is often the user who clicks a malicious link or fails to secure their passwords. As our lives become more digitized, cybersecurity literacy is becoming as essential as reading and writing.
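As one small illustration of the authentication piece, the sketch below shows a common way to store and check passwords without keeping the password itself. It uses only Python's standard library; the function names and the iteration count are assumptions chosen for this example, and a real system would follow current security guidance rather than these exact settings.

```python
import hashlib
import hmac
import os

# Illustrative work factor (an assumption for this sketch, not a recommendation).
ITERATIONS = 100_000


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return a random salt and the salted PBKDF2 hash of the password."""
    salt = os.urandom(16)  # a unique salt per password defeats precomputed tables
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-hash the candidate password and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)


# Hypothetical usage: only the salt and the hash would be stored.
salt, stored_hash = hash_password("correct horse battery staple")
print(verify_password("correct horse battery staple", salt, stored_hash))
print(verify_password("password123", salt, stored_hash))
```

Because only the salt and the hash are stored, a stolen database does not directly reveal anyone's password, although weak passwords can still be guessed. That is why user habits matter as much as the code.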

Automation and the Future of Work

One of the most profound impacts of technology is on the world of work. Automation — driven by AI, robotics, and data analytics — is transforming industries from manufacturing to finance. Machines now handle repetitive and dangerous tasks with precision and efficiency, freeing humans to focus on creativity, strategy, and innovation.

Yet this transition is not without anxiety. Many fear that automation will replace jobs, leading to widespread unemployment. History, however, shows that while technology eliminates some jobs, it also creates new ones. The challenge lies in adapting education and policy to prepare workers for this new era of digital collaboration.

The rise of remote work is another major shift. Accelerated by the COVID-19 pandemic, remote work has become a norm rather than an exception. Digital tools like Zoom and Slack, along with other collaborative software, have made it possible for people to work from anywhere, redefining the concept of a workplace.

The Green Side of Tech: Sustainability and Innovation

Technology is not only reshaping economies and lifestyles but also playing a crucial role in addressing global challenges like climate change. Green technology — innovations designed to reduce environmental impact — is becoming a central theme in modern development.

Renewable energy systems, electric vehicles, smart grids, and sustainable manufacturing are all powered by technological advancement. Artificial intelligence and big data are being used to optimize resource consumption, monitor ecosystems, and predict environmental trends.

Tech companies are also increasingly focusing on sustainable design, from recyclable materials to energy-efficient data centers. The idea of a circular economy, where resources are reused rather than discarded, is gaining traction. The intersection of technology and sustainability offers hope that innovation can lead not only to progress but also to preservation.

The Ethics of Technology: The Human Dilemma

As technology grows more powerful, so do the ethical questions surrounding it. Should AI have rights? How much privacy are we willing to sacrifice for convenience? Who controls the data that defines our digital identities? These questions do not have simple answers.

The use of facial recognition, genetic engineering, and data tracking has raised concerns about surveillance and control. Social media algorithms, designed to engage users, have also been criticized for spreading misinformation and deepening social divides. As machines become capable of making decisions, the responsibility for those decisions becomes a complex moral issue.

Ethical frameworks and regulations are beginning to emerge, but the pace of innovation often outstrips legislation. The challenge of the 21st century is not just to create powerful technologies but to ensure they serve humanity rather than dominate it.

The Future: Where Do We Go From Here?

Looking ahead, technology shows no signs of slowing down. Quantum computing promises to revolutionize data processing by solving certain problems that are impractical for classical computers. Biotechnology is merging with information technology, leading to innovations in personalized medicine, gene editing, and human augmentation.

The boundaries between the physical and digital worlds are blurring with the rise of augmented and virtual reality. The concept of the metaverse — a persistent, shared digital space — could redefine social interaction, entertainment, and even the economy.

Space exploration, powered by private companies and AI-driven systems, is entering a new golden age. Humans are once again looking to the stars, not just as observers but as pioneers.

Yet, amid all these advancements, the essence of technology remains human. It is the reflection of our creativity, our curiosity, and our courage to build something better. The future will be shaped not by the machines we create but by the values we instill in them.

Conclusion: Technology as a Mirror of Humanity

Technology is more than wires, code, or silicon — it is a mirror reflecting human ambition, brilliance, and flaws. It has given us tools to cure diseases, connect continents, and unlock the mysteries of the universe. It has also challenged our ethics, strained our attention, and reshaped our relationships.

As we stand on the edge of an age defined by artificial intelligence and automation, one truth remains clear: technology is what we make of it. It can be a force for division or a bridge to unity, a tool for control or a path to freedom. The responsibility lies not in the machines but in their creators.

The evolution of technology is the story of humanity itself — a story of endless reinvention, driven by the hope that the next invention will bring us closer to understanding not just the world, but ourselves.